Hear Node

The hear node lets the device listen for user input. The editor flow can branch depending on which words the device heard.

There are two ways to use hear nodes: with utterances, or with the new NLU model.

Utterances (old)

Utterances are the words or phrases that the device will listen for. When an utterance is matched, the flow continues down its branch.

If you put an empty variable as an utterance, the hear node will listen for any input it doesn't recognize and route the flow through that branch.

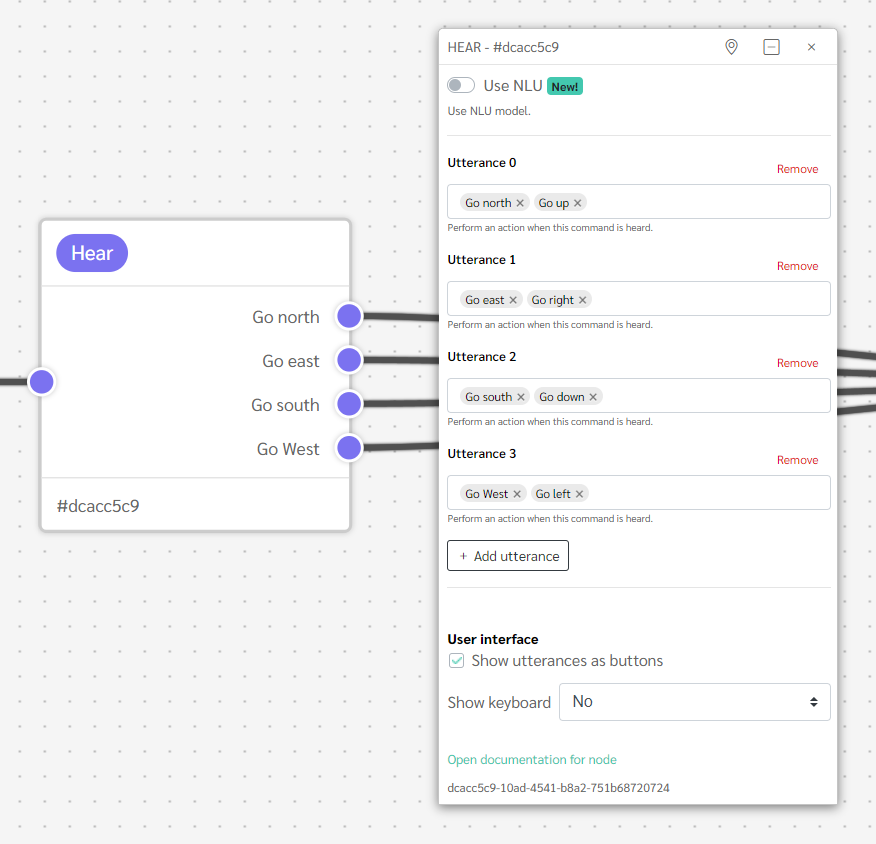

To add an utterance, type it in the text field and either press Enter or press the add button. If multiple words should follow the same editor flow, add them to a single utterance by entering them in the same text field.

Matching word

The matching word is saved in the variable system_userInput. This can be useful when there are multiple words defined for one utterance and you want to know what specific word was used.

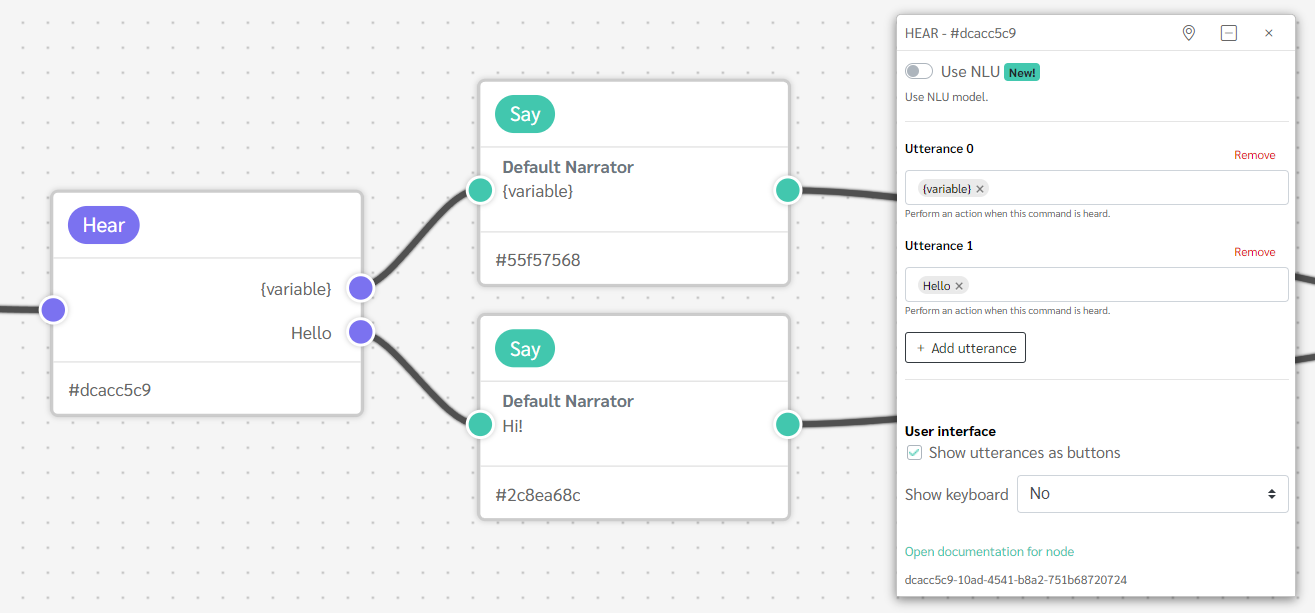
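The way the matching word ends up in system_userInput can be pictured with a small Python sketch. It is an illustration only, not the engine's implementation: the function `match_utterance`, the list-of-words representation of an utterance, and the case-insensitive comparison are assumptions. Only the variable name system_userInput comes from the text above.

```python
from typing import Optional


def match_utterance(utterances: list[list[str]], heard: str, state: dict) -> Optional[int]:
    """Return the index of the first utterance that contains the heard word.

    On a match, the specific word is stored in state["system_userInput"].
    Returns None when nothing matches.
    """
    normalized = heard.strip().lower()
    for index, words in enumerate(utterances):
        for word in words:
            if word.lower() == normalized:
                # Remember which of the utterance's words was actually used.
                state["system_userInput"] = word
                return index
    return None


# Hypothetical hear node with two utterances, each holding several words.
state: dict = {}
branch = match_utterance([["yes", "yeah", "sure"], ["no", "nope"]], "yeah", state)
print(branch, state["system_userInput"])  # prints: 0 yeah
```

Later nodes could then read system_userInput to tell "yeah" apart from "yes", even though both follow the same branch.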

Capturing raw input

To capture raw input from a hear node, add a variable to the hear node as an utterance, like {variable}. For this to work, the variable has to be the first or only utterance in the hear node. Otherwise, the engine will treat the variable as a repeat.

Both of these examples show that you can capture any input the user gives in a variable. The second example shows how you can catch raw input while also having other utterances. If the user says hello, the engine will choose the utterance that contains hello.

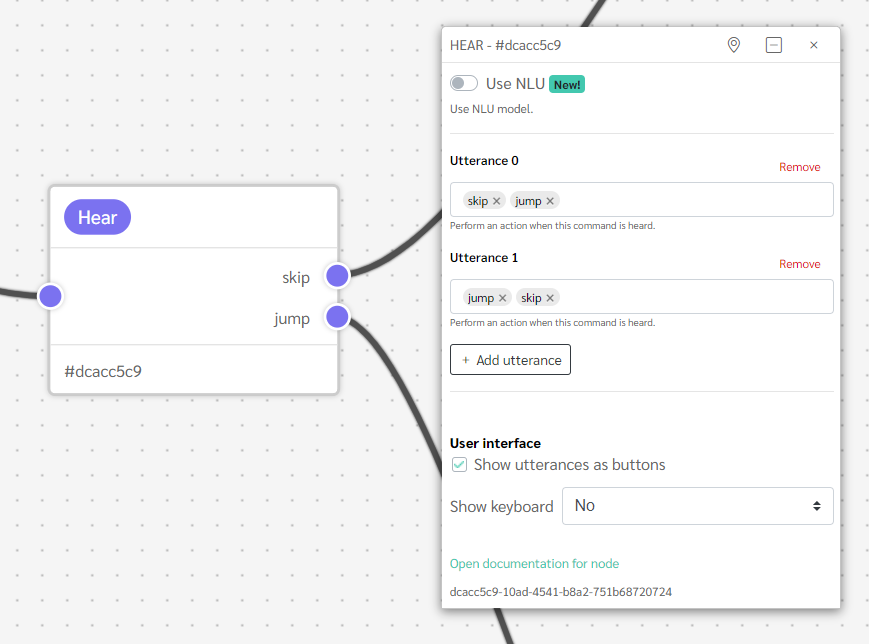
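The capture rules above can be sketched in Python. This is a simplified model under stated assumptions, not the engine's code: `route_input` and `VARIABLE_PATTERN` are hypothetical names, each utterance is modeled as a single string, and a variable that is not in the first position is simply reported as "no match" here.

```python
import re
from typing import Optional

# An utterance that consists only of {name} is treated as a capture variable.
VARIABLE_PATTERN = re.compile(r"^\{(\w+)\}$")


def route_input(utterances: list[str], heard: str, state: dict) -> Optional[int]:
    """Return the index of the branch to follow, or None if nothing applies."""
    # Explicit utterances win over the capture variable.
    for index, utterance in enumerate(utterances):
        is_variable = VARIABLE_PATTERN.match(utterance) is not None
        if not is_variable and utterance.lower() == heard.lower():
            return index
    # Only a variable in the first (or only) position captures raw input.
    if utterances:
        capture = VARIABLE_PATTERN.match(utterances[0])
        if capture:
            state[capture.group(1)] = heard
            return 0
    return None


state: dict = {}
print(route_input(["{answer}", "hello"], "hello", state))  # prints: 1
print(route_input(["{answer}", "hello"], "pizza", state))  # prints: 0
print(state)  # prints: {'answer': 'pizza'}
```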

Matching behavior

If two utterances in the same hear node both match the user input, the engine will follow the first utterance that matches.

In this example, both jump and skip exist in both utterances. If the input is either jump or skip, the engine will choose the first utterance that contains either word.
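As a rough illustration of this first-match rule, the Python sketch below walks the utterances in order and stops at the first one that contains the input. The function name `first_matching_branch` and the extra word hop are made up for the example, and the real engine may compare input differently.

```python
from typing import Optional


def first_matching_branch(utterances: list[list[str]], heard: str) -> Optional[int]:
    """Return the index of the first utterance that contains the heard word."""
    for index, words in enumerate(utterances):
        if heard in words:
            return index  # Later utterances are never considered.
    return None


# Both utterances contain jump and skip; only the second contains hop.
utterances = [["jump", "skip"], ["jump", "skip", "hop"]]
print(first_matching_branch(utterances, "skip"))  # prints: 0
print(first_matching_branch(utterances, "hop"))   # prints: 1
```

Because the order decides the outcome, a word that appears in two utterances can never reach the second branch.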

Behavior when user input does not match any utterance

If the user input doesn't match any utterance in the hear node, the engine will play the Repeat text of the last say node that has one, then return to the utterances of the hear node you are currently on. If no say node has any text in its Repeat field, nothing will be said.

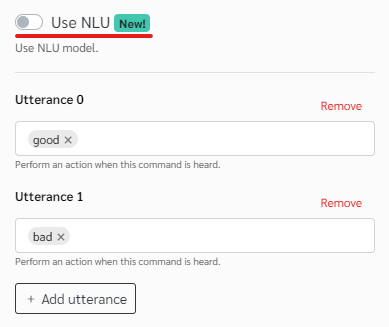
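The fallback can be sketched as a backwards search through the say nodes that have already played. The dictionary layout and the name `repeat_text_for` are assumptions made for this example, not the engine's data model.

```python
from typing import Optional


def repeat_text_for(say_nodes: list[dict]) -> Optional[str]:
    """Return the Repeat text of the most recent say node that has one.

    say_nodes is ordered from oldest to newest. Returns None when no say
    node has Repeat text, in which case nothing would be said.
    """
    for node in reversed(say_nodes):
        text = node.get("repeat", "")
        if text:
            return text
    return None


history = [
    {"text": "Do you want to open the door?", "repeat": "Please say yes or no."},
    {"text": "It looks heavy.", "repeat": ""},
]
print(repeat_text_for(history))  # prints: Please say yes or no.
```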

NLU (new)

The NLU model helps improve speech recognition.

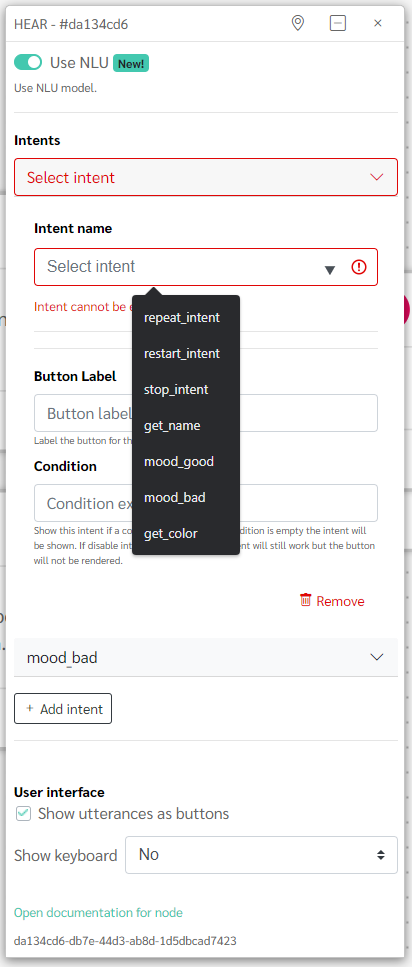

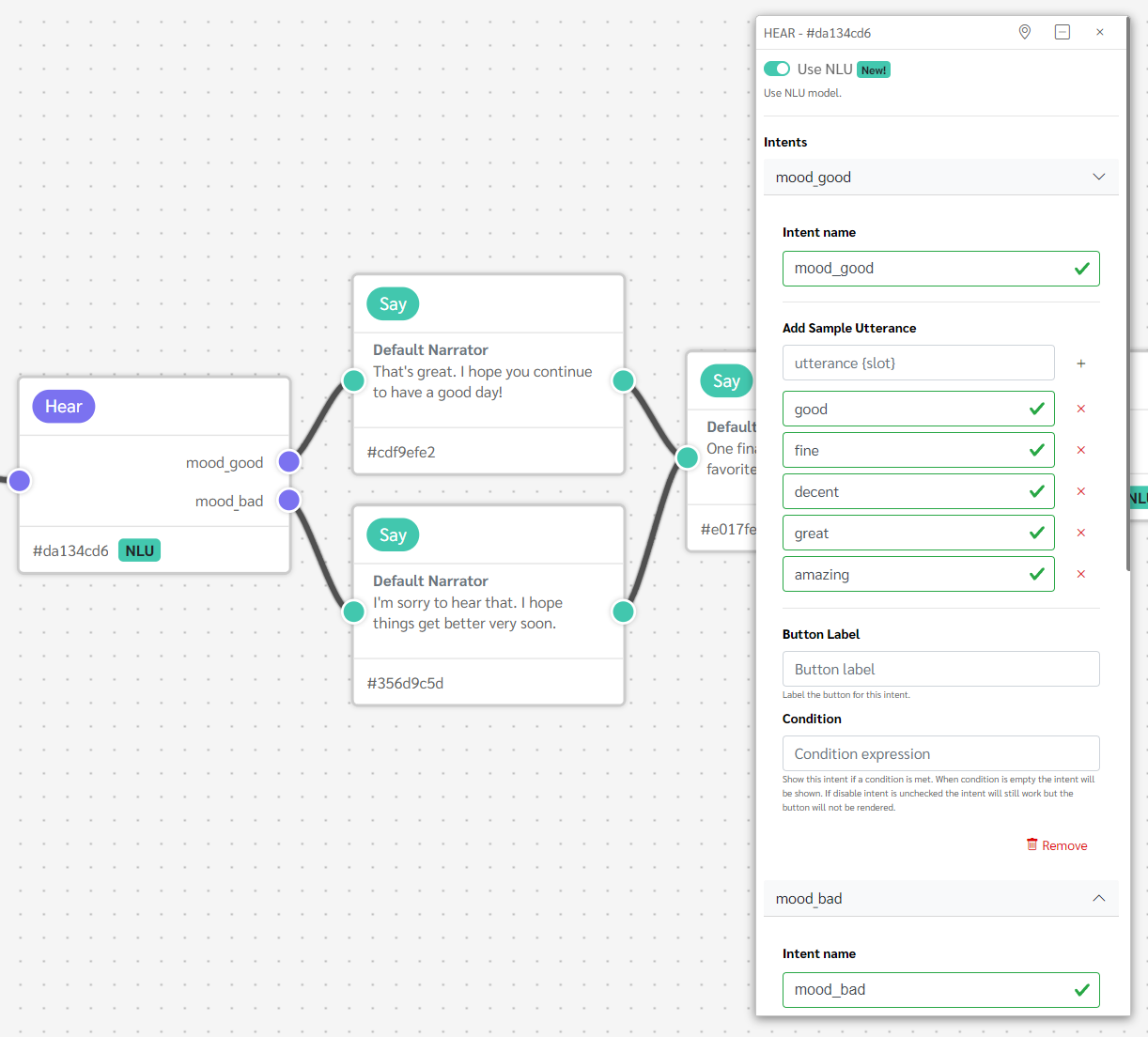

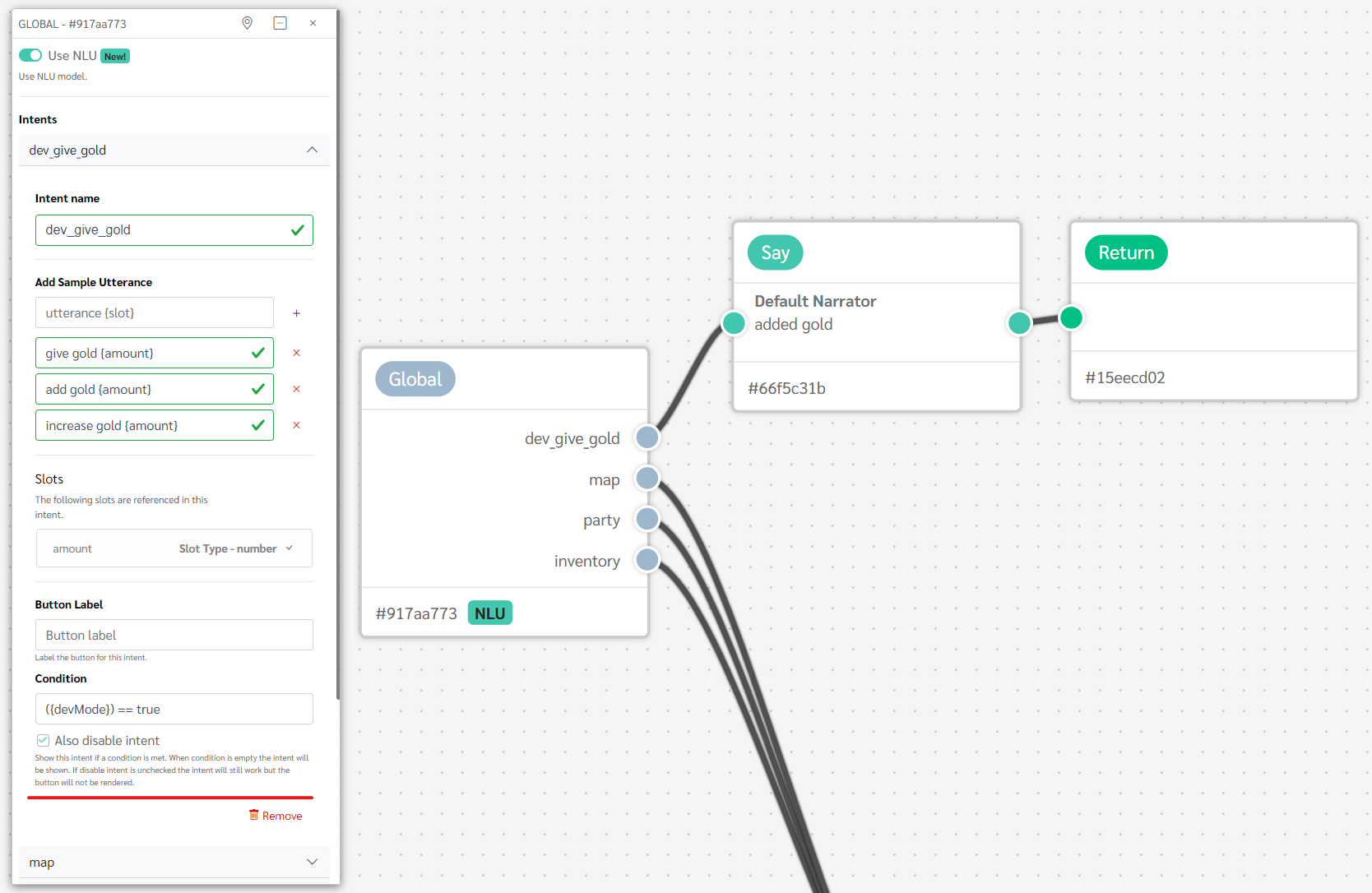

You can use your NLU model in a hear node by switching on the Use NLU toggle in the node options. The first time you switch on the toggle in a node, you may have to enable the NLU. If your hear node has existing utterances, it will be populated with the same number of empty intents. From this point, you should create intents that represent the utterances you had before.

Intents

For each socket, you need to select an intent. Your intents are listed in a dropdown. If you enter an intent that does not exist, you will be prompted to create it. Intent properties can be modified directly in the hear node.

When a valid intent is selected, you should see no warnings. This means the intent is configured correctly. If you encounter warnings, read them carefully and make sure your NLU model is configured correctly. The warnings describe the problems detected.

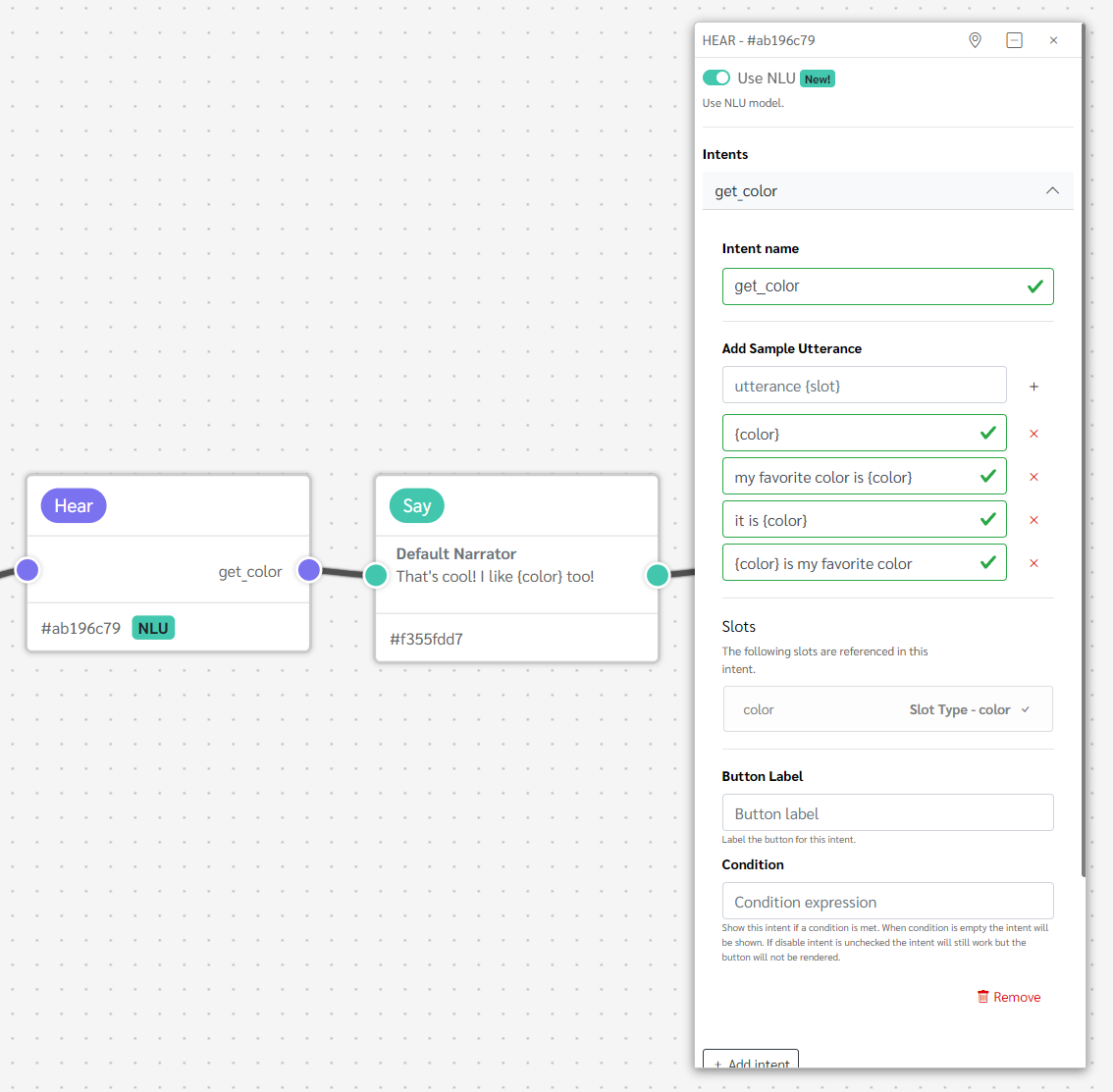

Capturing Variables

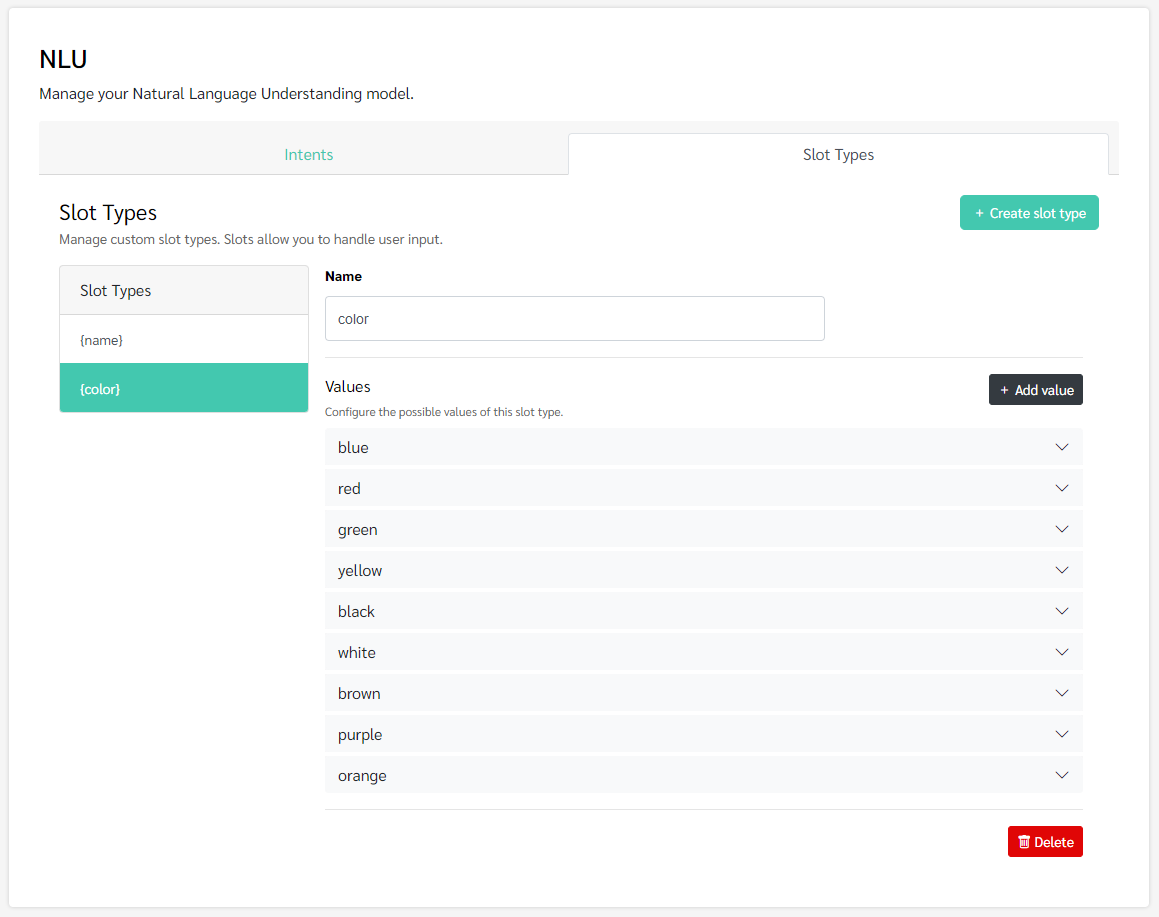

Using intents with slots allows you to capture variables. Note that at this time we do not support the capture of arbitrary values; only the values explicitly configured in your custom slot type will be captured.

For example, say we have an intent called 'get_color'. We configure the intent with several sample utterances that reference a custom slot type called 'color', which contains a list of possible color values. When this intent is used and a valid 'color' value is provided, a state variable called color will be created, containing the value said by the user.

You configure the values of the slot type in the NLU manager, which you can find by clicking the NLU tab in the workspace and then the 'Slot Types' sub tab.
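To make the 'color' example more concrete, here is a deliberately simplified Python sketch. A real NLU model does far more than a word lookup, so treat this only as a picture of the outcome: a configured value is stored in a state variable named after the slot, and values outside the slot type are not captured. The names `SLOT_TYPES` and `capture_slot` and the listed colors are assumptions.

```python
# Hypothetical slot type configuration: slot type name -> allowed values.
SLOT_TYPES = {"color": ["red", "green", "blue"]}


def capture_slot(slot_type: str, heard: str, state: dict) -> bool:
    """Store the first configured slot value found in the input.

    Returns True if a value was captured. Values that are not configured
    in the slot type are ignored.
    """
    words = heard.lower().split()
    for value in SLOT_TYPES.get(slot_type, []):
        if value in words:
            state[slot_type] = value
            return True
    return False


state: dict = {}
print(capture_slot("color", "I like blue", state), state)    # prints: True {'color': 'blue'}
print(capture_slot("color", "I like purple", state), state)  # prints: False {'color': 'blue'}
```

The second call leaves the earlier value in place because purple is not part of the configured slot type.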

Button Label

Label the button for the intent.

Condition

You can decide to show an intent only if a condition is met. For example, you may want intents that can only be used in development, or only once a player has reached a certain point of progress. By adding a condition, you can decide whether the intent is hidden or disabled entirely when the condition is not met. If hidden, the button will not be rendered, but the intent can still be invoked. If disabled, the intent is hidden and cannot be invoked by the user.
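The difference between hidden and disabled can be summarized in a short Python sketch. The names `IntentVisibility` and `resolve_visibility` are hypothetical, and the flag `restriction_applies` stands for "the condition currently restricts this intent"; how the editor evaluates the condition itself is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class IntentVisibility:
    button_rendered: bool  # Is the button drawn on screen?
    invocable: bool        # Can the user still trigger the intent?


def resolve_visibility(restriction_applies: bool, mode: str) -> IntentVisibility:
    """Map the chosen mode ("hidden" or "disabled") to what the user experiences."""
    if not restriction_applies:
        return IntentVisibility(button_rendered=True, invocable=True)
    if mode == "hidden":
        # No button, but the intent can still be invoked.
        return IntentVisibility(button_rendered=False, invocable=True)
    if mode == "disabled":
        # No button and the intent cannot be invoked.
        return IntentVisibility(button_rendered=False, invocable=False)
    raise ValueError(f"unknown mode: {mode}")


print(resolve_visibility(True, "hidden"))
print(resolve_visibility(True, "disabled"))
```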

Options

Here is a description of the other options for the hear node.

Show utterances as buttons

Control whether the utterances should be shown as buttons on supported platforms (for example, the web player or apps).

Wait for audio end before showing buttons

Don't show the buttons until the audio has finished playing. This can be useful to prevent the player from seeing the options too early.

Use "Time after audio end" to set a time, either before or after the audio finishes playing, when the buttons will be shown.

Time limit for answering

Set a limit on how much time the user has to give an answer after the audio finishes playing.

It can also be used to automatically proceed to the next section.

When the time limit is reached, the utterance selected in the dropdown is automatically used as the response.
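How the timing options relate to the end of the audio can be shown with a little arithmetic. The sketch below assumes all values are in seconds, that a negative "Time after audio end" means "before the audio ends", and that the buttons cannot appear before the audio starts. The function name `hear_node_schedule` is made up for the example.

```python
def hear_node_schedule(audio_end: float, time_after_audio_end: float, time_limit: float) -> dict:
    """Return when the buttons appear and when the default answer is used.

    All values are seconds counted from the start of the audio.
    """
    return {
        # A negative offset shows the buttons before the audio has finished.
        "buttons_shown_at": max(0.0, audio_end + time_after_audio_end),
        # The time limit starts counting when the audio finishes.
        "auto_answer_at": audio_end + time_limit,
    }


schedule = hear_node_schedule(audio_end=8.0, time_after_audio_end=-2.0, time_limit=5.0)
print(schedule)  # prints: {'buttons_shown_at': 6.0, 'auto_answer_at': 13.0}
```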

Show keyboard

Show the keyboard to allow the user to enter free-form answers.